Danes’ Legal Shield Against Deepfakes

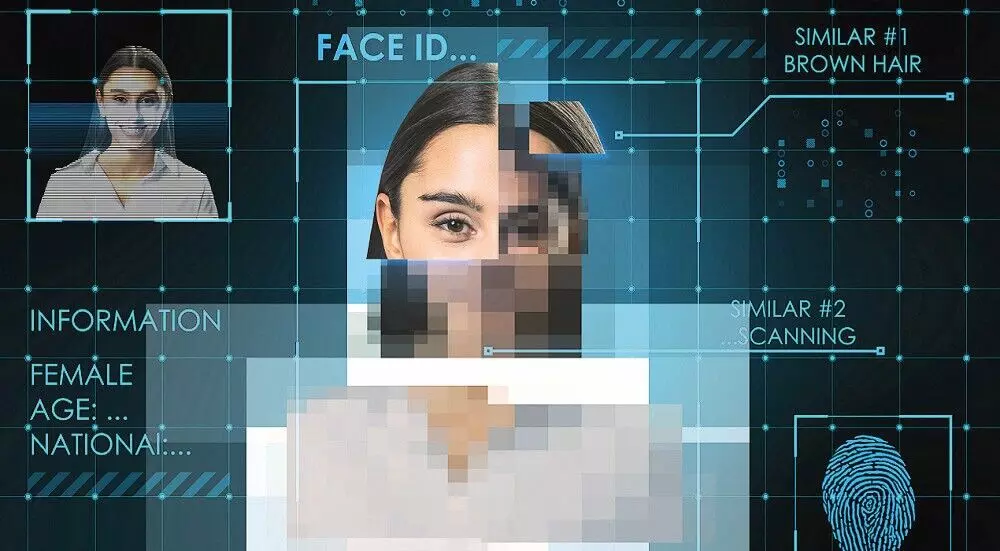

As the fight against AI deepfakes intensifies, Danish citizens are set to gain ownership of their digital selves, along with the legal power to have posts taken down when their faces, voices or bodies are used in deepfakes, whether for scams, porn or politics.

With a measure it describes as the first of its kind in Europe to combat the rise of AI-generated deepfakes, Denmark is poised to become the first European country to grant individuals copyright over their own face, voice, and physical likeness.

The proposal, backed by nearly all political parties in the country, marks a significant shift in digital rights and could have far-reaching consequences for creators, tech platforms, and influencers alike.

The legislation, expected to be finalised this autumn and enacted by the end of 2025, would amend Denmark’s copyright law to allow individuals to claim legal ownership of their personal appearance and voice. It would also let people in Denmark demand that online platforms remove content that uses their likeness without their consent.

Once passed, the law will give people three key rights: to demand the removal of AI-generated content that uses their likeness, to seek compensation if harmed, and to hold platforms accountable for hosting deepfakes.

Minister for Culture Jakob Engel-Schmidt has been quoted as saying he hoped the Bill would send a message that everyone has the right to how they look and sound.

“In the Bill, we agree and are sending an unequivocal message that everybody has the right to their own body, their own voice and their own facial features, which is apparently not how the current law is protecting people against generative AI,” he said.

“Human beings can be run through the digital copy machine and be misused for all sorts of purposes, and I’m not willing to accept that.”

Engel-Schmidt believes that tech giants will take the legislation very seriously, as violations could result in “severe fines” and could become a matter for the European Commission.

The Ministry of Culture has already secured broad cross-party agreement, with nine in 10 MPs backing the plan.

The legislation will also cover non-consensual “realistically, digitally generated imitations” of artists’ performances, and the artists concerned could receive compensation when cases are identified.

The Ministry of Culture plans to submit a proposal before the summer recess and to table the formal amendment in the autumn. The Danish government hopes that other European Union countries will follow its lead and introduce similar legislation, and it aims to use its EU presidency to push for change.

“Of course, this is new ground we are breaking, and if the platforms are not complying with that, we are willing to take additional steps,” said Engel-Schmidt.

In practical terms, this means that if someone creates a deepfake video, synthetic voice clip, or manipulated image using your likeness without consent, you’ll have the right to demand its removal and potentially seek financial compensation.

Engel-Schmidt has argued that this is essential because people today can be copied digitally and misused in ways that could not have been conceived of before.

The proposed law includes carve-outs for parody and satire to protect freedom of expression, but its core objective is to curb damaging and deceptive uses of AI, such as non-consensual deepfake pornography, scam campaigns, and fabricated political content.

For the influencer marketing industry, this legislation could be a game-changer. As generative AI tools become more accessible, the risk of brand ambassadors or creators being impersonated is growing. Unauthorised use of a creator’s likeness in branded content, AI-generated videos, or fake endorsements has the potential to damage reputations, mislead consumers, and undermine trust.

Under the proposed Danish law, individuals would gain a legal framework to protect their digital identity and take action when their likeness is misused, whether by influencers or private users. Platforms that fail to remove flagged content could face steep fines, putting additional pressure on tech companies to monitor and respond swiftly to AI-generated violations.

Denmark’s bold proposal is already drawing international attention. As the country prepares to assume the presidency of the EU Council in 2025, officials say they will advocate for similar protections all across Europe.

Meanwhile, in the Netherlands, a majority of MPs are backing a similar proposal to give citizens copyright over their own body, facial features and voice, in order to stop people from creating AI-generated deepfakes of them and putting them online.

Following Denmark’s announcement, MPs from GroenLinks-PvdA, VVD, NSC and D66 now want to follow suit. They have also called for action against big tech companies that fail to tackle the spread of deepfakes on their platforms.

Deepfakes are also used to commit bank fraud. According to figures from digital identity company Signicat, bank fraud involving deepfakes has increased by 2,137 per cent over the last three years. Cases include identity fraud in which criminals take over a person’s account by getting past facial recognition checks.

Advanced AI techniques are making it increasingly difficult to distinguish real from fake, AI expert Jarno Duursma told broadcaster NOS. But despite the many apps available, creating “lifelike videos” is still not easy, he said.

Duursma said legislation is a good idea because it would make legal action more accessible to victims.

Lawyer Diego Guerrero Obando, who specialises in intellectual property law, has been quoted as saying that legal protection against appearing in a deepfake video is currently spread across portrait rights, the right to privacy and tort law (civil wrongs). Adding a person’s voice to the package would make the legislation more complete, he said.

Obando said any case against big tech companies would be a “challenge” and could turn into a battle of “David against Goliath”. “An individual would have to take on an often anonymous perpetrator or a big tech platform,” he added.

Dutch privacy watchdog Autoriteit Persoonsgegevens (AP) is calling on victims of sexually suggestive deepfakes to report them so that it can impose fines and other measures.

Duursma and Obando both warned that the new legislation could compromise freedom of expression. However, parody and satire using deepfakes would still be allowed under the proposed rules.

The EU’s AI Act sorts AI systems into four risk categories: minimal, limited, high and unacceptable. Deepfakes are considered “limited risk” and are therefore subject to certain transparency rules.

That means there is no outright ban on deepfakes, but the Act requires companies to label AI-generated content on their platforms, for example by watermarking videos, and to disclose which training data were used to develop their models.

If an AI company is found in breach of the transparency rules, it could face a fine of up to €15 million or 3 per cent of its worldwide annual turnover for the preceding financial year, whichever is higher. For banned practices, the ceiling rises to €35 million or 7 per cent of worldwide turnover.

The AI Act also bans manipulative AI, which could include systems that use subliminal or deceptive techniques to “impair informed decision-making”.

Some legislation focuses specifically on pornographic and other sexual content. For example, the EU’s directive on violence against women criminalises the “non-consensual production, manipulation or altering… of material that makes it appear as though a person is engaged in sexual activities”.

The directive covers deepfakes and any other material created using AI. It does not specify the penalty for an individual or company found in violation, leaving that for each EU member state to decide. Member states have until June 2027 to transpose the directive into national law.

In 2024, France passed an update to its criminal code to prohibit people from sharing any AI-created visual or audio content, such as deepfakes, without the consent of the person portrayed in it. Any reshared content must be clearly disclosed as AI-generated.

Distributors of these videos or audio could face up to a year in prison and a €15,000 fine; the penalty goes up to two years in prison and a €45,000 fine if the deepfake is shared through an “online service”.

The changes to the criminal code also include a specific ban on pornographic deepfakes, even if they are clearly marked as fake.

The UK has several laws that deal with the creation of deepfake pornography, including the recent amendments to the Data (Use and Access) Bill.

The law targets “heinous abusers” who create fake images for “sexual gratification or to cause alarm, distress or humiliation”. Violators could face an “unlimited fine”.

Another recent addition is a possible two-year prison sentence under the UK’s Sexual Offences Act for those who create sexual deepfakes.

The UK’s Online Safety Act makes it illegal to share or threaten to share non-consensual sexual images on social media.

In Australia, the New South Wales government is strengthening protections against image-based abuse by outlawing the creation and distribution of sexually explicit deepfakes.

Legislation will be introduced soon to expand existing offences related to the production and distribution of intimate images without consent to cover those created entirely using artificial intelligence. It is already a crime in NSW to record or distribute intimate images of a person without their consent or to threaten to do so. This includes intimate images that have been digitally altered.